Even the tech giants stumble in artificial intelligence. In February 2024, Google came under fire when the image generation feature in Gemini, which was built on its Imagen 2 model, showed a peculiar bias: it often failed to depict white people accurately. The criticism prompted Google to act quickly and pause Gemini's generation of images of people while it worked on a fix.

But what caused this bias in the first place? And how has Google addressed it? This article delves into the complexities of AI bias, examines the “white people” glitch, and explores Google’s efforts to rectify the issue.

The “White People” Glitch: Unmasking the Bias

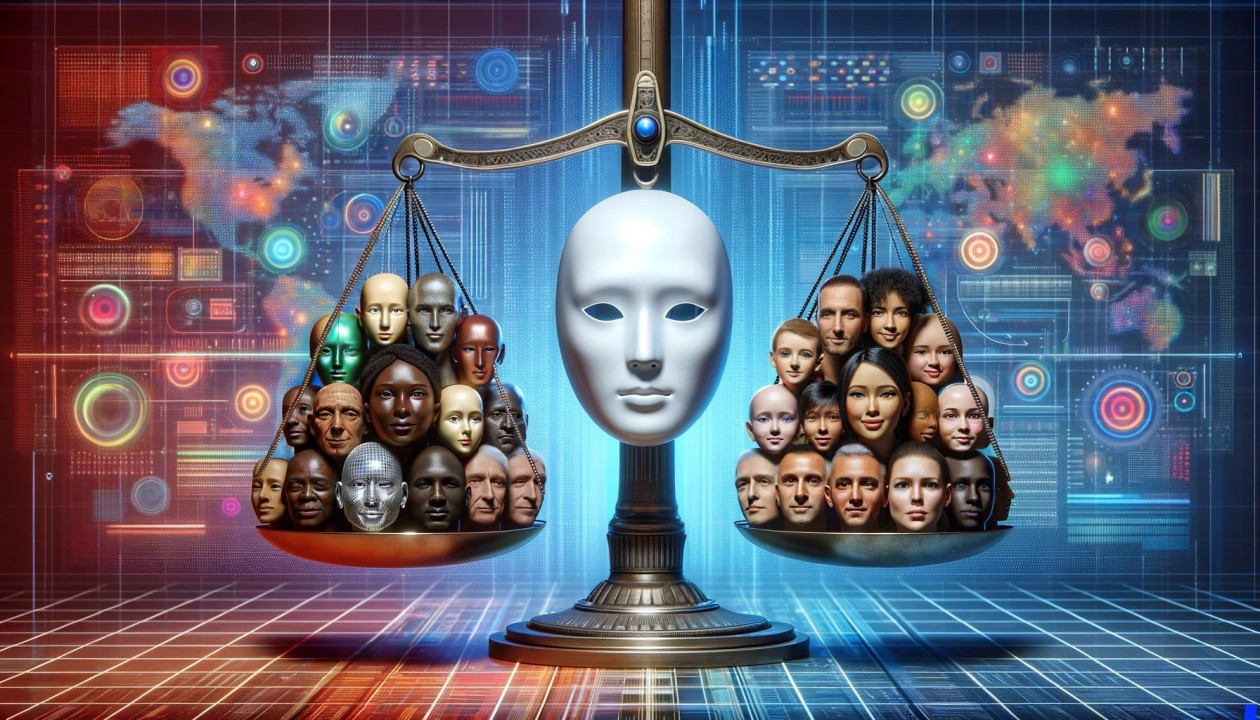

The “white people” glitch showed up as Imagen’s inability to generate images of white individuals consistently. When prompted to create pictures featuring white people, the AI often produced images that misrepresented them or contained no white individuals at all. The behavior raised eyebrows because it highlighted how an AI system can perpetuate, and even amplify, biases that already exist in society.

The Root of the Problem: Biased Training Data

Biased training data is the explanation most often offered for glitches like this one. AI models like Imagen learn from massive datasets of images and text. If these datasets are not diverse and representative, the model can develop biases that reflect the skew in what it was trained on, so a dataset that underrepresents a group can leave the model struggling to depict that group accurately. Data is not the only possible source, though: Google’s own public explanation pointed to tuning meant to make the system show a range of people, which failed to account for prompts where a range was clearly not appropriate.
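The kind of dataset skew described above can be checked directly when a dataset carries group labels. The following is a minimal sketch, not Google's method: it assumes each training example has a hypothetical group label and that a reference share for each group is available. The function name `representation_gaps`, the group names, and all numbers are illustrative.

```python
from collections import Counter


def representation_gaps(labels, reference_shares):
    """Return observed share minus reference share for each group.

    A negative value means the group is underrepresented relative to
    the reference; a positive value means it is overrepresented.
    """
    if not labels:
        raise ValueError("labels must not be empty")
    counts = Counter(labels)
    total = len(labels)
    return {
        group: counts.get(group, 0) / total - share
        for group, share in reference_shares.items()
    }


# Hypothetical toy data: group names and shares are made up for illustration.
labels = ["a"] * 70 + ["b"] * 20 + ["c"] * 10
reference = {"a": 0.5, "b": 0.3, "c": 0.2}

gaps = representation_gaps(labels, reference)
for group, gap in gaps.items():
    print(f"{group}: {gap:+.2f}")
```

In practice, large image datasets rarely come with reliable group labels, so an audit like this usually depends on annotation work that is itself imperfect.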

Google’s Response: A Multifaceted Approach

Google took swift action to address the “white people” glitch, implementing a multi-pronged approach to mitigate bias in Imagen.

- Improved Training Data: Google is actively working to improve the diversity and representativeness of its training datasets. This involves collecting and curating images and text from a wider range of sources, ensuring that the AI is exposed to a more balanced representation of the world.

- Bias Mitigation Techniques: Google is also exploring and implementing various bias mitigation techniques in Imagen. These techniques aim to identify and reduce biases in the AI’s outputs, ensuring that the generated images are fair and accurate.

- Transparency and Accountability: Google is committed to transparency and accountability in its AI development process. The company is actively sharing information about its efforts to address bias in Imagen, and it’s encouraging feedback from the community to help identify and rectify any remaining issues.
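One generic way to act on the first two points is to rebalance how often each group is sampled during training. The sketch below shows inverse-frequency sampling weights, a common textbook technique. Nothing in this article confirms that Google uses it for Imagen, and the function name `balanced_weights` and the toy labels are hypothetical.

```python
import random
from collections import Counter


def balanced_weights(labels):
    """Give each example a weight so every group has equal total weight.

    Each example in a group with `count` members gets weight
    1 / (n_groups * count), so all weights together sum to 1.
    """
    counts = Counter(labels)
    n_groups = len(counts)
    return [1.0 / (n_groups * counts[label]) for label in labels]


# Hypothetical toy data: 70% "a", 20% "b", 10% "c".
labels = ["a"] * 70 + ["b"] * 20 + ["c"] * 10
weights = balanced_weights(labels)

# Draw a weighted sample; a fixed seed keeps the run repeatable.
rng = random.Random(0)
sample = rng.choices(labels, weights=weights, k=3000)
print(Counter(sample))
```

Resampling only evens out exposure during training. It cannot add variety that the underlying data lacks, and overcorrecting can introduce distortions of its own, which is why this kind of step is usually paired with evaluation of the model's actual outputs.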

The Importance of Addressing AI Bias

The “white people” glitch in Imagen serves as a stark reminder of the importance of addressing bias in AI. As AI becomes increasingly integrated into our lives, it’s crucial to ensure that these systems are fair, unbiased, and representative of the diverse world we live in. Failure to do so can have serious consequences, perpetuating and even amplifying existing societal inequalities.

The Ongoing Battle Against Bias

While Google has made significant strides in addressing the “white people” glitch, the battle against bias in AI is far from over. AI is a rapidly evolving field, and new challenges and biases are likely to emerge as the technology advances. It’s essential for tech companies, researchers, and society as a whole to remain vigilant and proactive in identifying and addressing these biases, ensuring that AI serves as a force for good in the world.

Google’s swift response to the “white people” glitch in Imagen demonstrates the company’s commitment to addressing bias in AI. By improving training data, implementing bias mitigation techniques, and prioritizing transparency and accountability, Google is taking important steps toward fairer AI systems. The work is not finished, but these efforts offer a hopeful glimpse of a future where AI serves as a tool for inclusivity and equality.