Artificial intelligence (AI) has made significant strides in mimicking human visual perception, yet optical illusions pose a distinctive challenge. These visual phenomena offer revealing insights into how AI interprets complex visual data compared with the human brain.

Understanding Optical Illusions

Optical illusions deceive the brain by presenting visual cues that lead to misinterpretation. This happens because of the mechanisms the brain uses to make sense of the visual world: it often fills in gaps or predicts what it will see based on prior experience. For humans, this can mean seeing movement in static images or perceiving depth in flat pictures.

The three main types of optical illusions are:

- Literal Illusions: These combine multiple images into one, tricking the brain into seeing something different from the actual components.

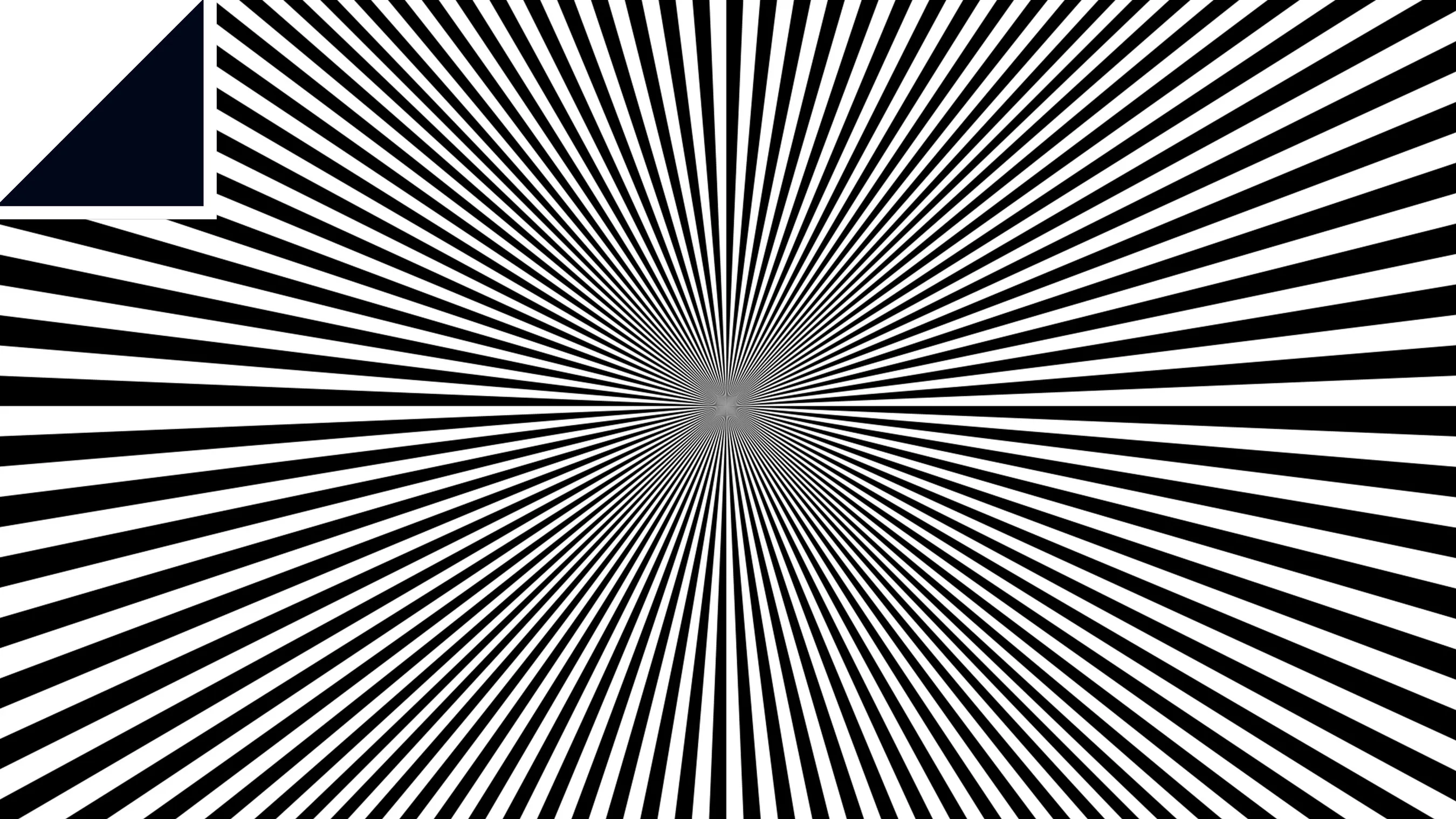

- Physiological Illusions: These result from the overstimulation of the brain’s visual pathways, often through repetitive patterns or high contrast.

- Cognitive Illusions: These involve the brain making incorrect inferences based on learned assumptions, such as the famous Kanizsa triangle, where the brain perceives a triangle that is not actually drawn.

AI and Optical Illusions

When AI encounters an optical illusion, it can struggle in ways similar to humans. Deep neural networks (DNNs), which are loosely inspired by the brain's visual processing, can be fooled by illusions. Part of the explanation comes from networks built on predictive coding, a theory suggesting that the brain constantly makes predictions about the visual world and adjusts them based on discrepancies between prediction and actual sensory input.
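The predictive-coding idea can be made concrete with a toy example. The sketch below is a deliberately simplified single-layer model, not the architecture of any particular study; the function names (`predict`, `infer`), the weights, and the learning rate are hypothetical. It keeps an internal estimate of the hidden causes of an input, predicts the input from that estimate, and repeatedly nudges the estimate to shrink the prediction error.

```python
"""Toy predictive-coding loop (illustrative sketch with hypothetical names)."""


def predict(weights, latent):
    # Top-down prediction of the input: weights (n_inputs x n_latents) times latent vector.
    return [sum(w * r for w, r in zip(row, latent)) for row in weights]


def infer(weights, sensory_input, steps=200, lr=0.1):
    """Refine a latent estimate by repeatedly reducing prediction error."""
    n_latents = len(weights[0])
    latent = [0.0] * n_latents  # start with no belief about the hidden causes
    error = list(sensory_input)
    for _ in range(steps):
        prediction = predict(weights, latent)
        # Prediction error: what the senses report minus what was expected.
        error = [x - p for x, p in zip(sensory_input, prediction)]
        # Gradient step on the squared error: move the latent along W^T * error.
        for j in range(n_latents):
            latent[j] += lr * sum(weights[i][j] * error[i] for i in range(len(error)))
    return latent, error


if __name__ == "__main__":
    weights = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
    latent, error = infer(weights, [0.5, 0.2, 0.7])
    print(latent, error)
```

With the example weights, the input `[0.5, 0.2, 0.7]` is fully explained by the latent values `0.5` and `0.2`, so the estimate converges there and the remaining error approaches zero. In this framing, an illusion is a case where the model's learned expectations produce a confident prediction the input does not actually support.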

One study found that a DNN trained to predict upcoming frames of videos also perceived illusory motion in static images, such as the “Rotating Snakes” illusion. This suggests that AI’s predictive models can be tricked into seeing motion where there is none, mirroring human perception.

Implications for AI Development

These findings underscore a critical aspect of AI development: the importance of context in visual interpretation. Current AI systems often lack the nuanced understanding of context that humans possess. For instance, small alterations to an image, such as placing stickers on a stop sign, can lead an AI system to misclassify it entirely.
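The stop-sign case is an example of an adversarial perturbation. As a hedged illustration, the sketch below uses a toy linear classifier with invented weights (not a real traffic-sign model) and applies a fast-gradient-sign-style step, a simpler digital relative of physical sticker attacks. Each input feature moves by at most `epsilon` in whichever direction lowers the classifier's score; the names `score`, `classify`, and `fgsm_perturb` are hypothetical.

```python
"""Toy adversarial perturbation against a linear classifier (illustrative sketch)."""


def score(weights, bias, x):
    # Linear decision score: positive means "stop sign" in this toy model.
    return sum(w * xi for w, xi in zip(weights, x)) + bias


def classify(weights, bias, x):
    return "stop sign" if score(weights, bias, x) > 0 else "other"


def sign(v):
    # Returns 1, 0, or -1 depending on the sign of v.
    return (v > 0) - (v < 0)


def fgsm_perturb(weights, x, epsilon):
    # For a linear score w.x + b, the gradient with respect to x is w itself,
    # so stepping against sign(w) lowers the score fastest when every
    # feature may change by at most epsilon.
    return [xi - epsilon * sign(w) for w, xi in zip(weights, x)]


if __name__ == "__main__":
    weights = [0.1 if i % 2 == 0 else -0.1 for i in range(100)]
    image = [0.55 if i % 2 == 0 else 0.45 for i in range(100)]
    adversarial = fgsm_perturb(weights, image, 0.06)
    print(classify(weights, 0.0, image), "->", classify(weights, 0.0, adversarial))
```

Each of the 100 features shifts by only 0.06, yet the shifts all push the score the same way, so they add up to a total change of 0.6 and flip the decision. That accumulation of individually tiny changes is why such perturbations can be hard for a human to notice while still misleading the model.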

To improve AI’s robustness against such deceptions, researchers are working to enhance contextual awareness in AI algorithms. One approach integrates horizontal connections, links between units within the same layer of a visual network, whose influence is tuned by contextual cues; this could make AI harder to fool with optical illusions.
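A minimal sketch of the horizontal-connection idea follows, assuming a one-dimensional row of edge-detecting units. The function `lateral_update` and its `context_gate` parameter are hypothetical stand-ins: the gate is a single number representing contextual modulation, not a model from the research described above. The sketch shows only the mechanism, how context can switch lateral influence on or off; it does not demonstrate robustness.

```python
"""Toy horizontal (same-layer) connections gated by context (illustrative sketch)."""


def lateral_update(feedforward, lateral_weight, context_gate, steps=3):
    """Refine a row of unit activations using connections to neighbouring units.

    feedforward: bottom-up activations, one per position (0.0 = no edge detected).
    lateral_weight: strength of the connection to each immediate neighbour.
    context_gate: 0..1 factor standing in for contextual modulation (hypothetical).
    """
    act = list(feedforward)
    n = len(act)
    for _ in range(steps):
        new_act = []
        for i in range(n):
            left = act[i - 1] if i > 0 else 0.0
            right = act[i + 1] if i < n - 1 else 0.0
            # Neighbours support each other only to the extent context allows.
            support = context_gate * lateral_weight * (left + right)
            # Activations are capped at 1.0 so support cannot grow without bound.
            new_act.append(min(1.0, feedforward[i] + support))
        act = new_act
    return act


if __name__ == "__main__":
    row = [1.0, 1.0, 0.0, 1.0, 1.0]  # a contour with a gap in the middle
    print(lateral_update(row, 0.5, 1.0))  # gap filled in by neighbours
    print(lateral_update(row, 0.5, 0.0))  # context off: input passes through unchanged
```

With the gate open, the unit over the gap is driven by its active neighbours and the contour is completed, loosely resembling the illusory edges of the Kanizsa triangle; with the gate closed, the row is reported exactly as the input shows it. Learning when to open or close such gates is the kind of contextual control the approach aims for.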

AI’s encounter with optical illusions reveals both the sophistication and the limitations of current artificial vision systems. While these systems can mimic human perception to a remarkable extent, they also share some of its vulnerabilities to visual tricks. Advancing AI to handle these challenges involves deepening its contextual understanding and refining its predictive models, paving the way for more accurate and reliable AI applications in the future.