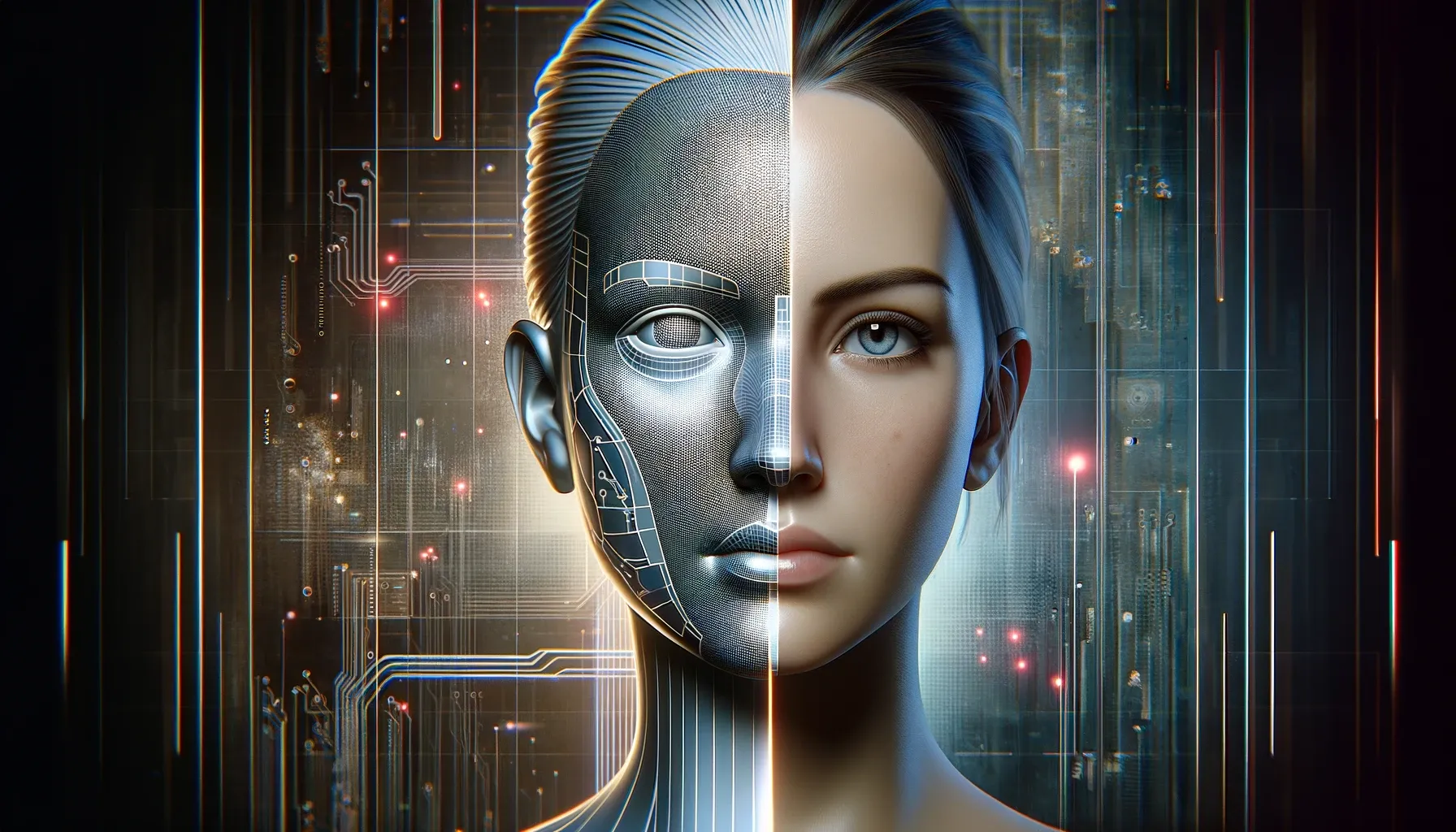

Google’s recent unveiling of Lumiere, an AI video generator that can produce five-second photorealistic videos from simple text prompts, has captivated the tech world. The technology promises to transform content creation by letting people produce high-quality video without extensive skills or resources. Amid the excitement, however, concerns that Lumiere could be misused to create deepfakes (hyperrealistic manipulated videos) have cast a shadow over its potential.

Key Highlights:

- Google’s AI video generator, Lumiere, creates photorealistic videos from text prompts.

- Its ease of use and accessibility raise concerns about the potential for malicious deepfakes.

- Experts urge Google to implement robust safeguards and detection tools.

- The debate on balancing innovation with ethical considerations intensifies.

The Power of Lumiere: Creativity at Your Fingertips

Lumiere is built on diffusion models, a class of machine learning models that generate realistic images and videos by starting from random noise and removing it step by step. Many earlier AI video generators first produce a handful of distant keyframes and then fill in the frames between them. Lumiere instead generates the entire clip in a single pass, which yields smoother, more temporally consistent motion. Because users only need to describe what they want in a text prompt, the tool could let people with limited technical expertise bring their ideas to life, opening the door to many creative applications.
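To make "the entire clip in a single pass" more concrete, here is a toy sketch in Python. It is not Lumiere's actual model. It assumes a generic deterministic (DDIM-style) diffusion sampler and replaces the trained network with a hypothetical `toy_denoiser` that already knows the target clip, just so the loop runs end to end. The point it illustrates is structural: noise for every frame is drawn up front, and each denoising step updates all frames of the clip together rather than producing frames separately and joining them afterwards. The frame count, frame rate, noise schedule, and all function names are illustrative assumptions.

```python
import math
import random
from typing import List

Clip = List[List[float]]  # one inner list of pixel values per frame

# Illustrative sizes (assumptions): 80 frames at 16 fps is a five-second clip;
# each "frame" is a tiny flat list of pixels to keep the sketch small.
NUM_FRAMES, FPS, PIXELS_PER_FRAME = 80, 16, 12
NUM_STEPS = 50


def make_schedule(num_steps: int) -> List[float]:
    """Cumulative signal levels (alpha-bar): near 1 (clean) falling toward 0 (noise)."""
    abars, running = [], 1.0
    for i in range(num_steps):
        beta = 1e-4 + (0.2 - 1e-4) * i / (num_steps - 1)  # linear noise schedule
        running *= 1.0 - beta
        abars.append(running)
    return abars


def toy_denoiser(clip: Clip, abar_t: float, target: Clip) -> Clip:
    """HYPOTHETICAL stand-in for a trained network that predicts the noise in
    every pixel of every frame. It cheats by knowing the target clip."""
    s, n = math.sqrt(abar_t), math.sqrt(1.0 - abar_t)
    return [[(x - s * y) / n for x, y in zip(frame, tgt)]
            for frame, tgt in zip(clip, target)]


def sample_whole_clip(target: Clip, abars: List[float], rng: random.Random) -> Clip:
    # Every frame starts as pure Gaussian noise, held together as one clip.
    clip = [[rng.gauss(0.0, 1.0) for _ in frame] for frame in target]
    for t in reversed(range(len(abars))):
        abar_t = abars[t]
        abar_prev = abars[t - 1] if t > 0 else 1.0
        eps = toy_denoiser(clip, abar_t, target)  # one call covers all frames jointly
        new_clip = []
        for frame, frame_eps in zip(clip, eps):
            new_frame = []
            for x, e in zip(frame, frame_eps):
                # Estimate the clean pixel, then step to the previous noise level
                # (deterministic DDIM-style update with eta = 0).
                x0_pred = (x - math.sqrt(1.0 - abar_t) * e) / math.sqrt(abar_t)
                new_frame.append(math.sqrt(abar_prev) * x0_pred
                                 + math.sqrt(1.0 - abar_prev) * e)
            new_clip.append(new_frame)
        clip = new_clip
    return clip


if __name__ == "__main__":
    rng = random.Random(0)
    target = [[rng.uniform(-1.0, 1.0) for _ in range(PIXELS_PER_FRAME)]
              for _ in range(NUM_FRAMES)]
    clip = sample_whole_clip(target, make_schedule(NUM_STEPS), rng)
    print(len(clip), "frames =", NUM_FRAMES / FPS, "seconds")  # 80 frames = 5.0 seconds
```

In a real system the denoiser is a large neural network conditioned on the text prompt, and it has no access to the answer. The cheat here only keeps the sketch short and checkable, while the sampling loop still operates on the whole clip at every step.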

From crafting engaging marketing materials and social media content to visualizing scientific concepts and prototyping user interfaces, Lumiere’s possible uses are broad. Imagine bringing historical figures to life for educational purposes, creating immersive virtual experiences, or personalizing video greetings. The potential for positive impact across many industries is considerable.

The Deepfake Dilemma: Blurring the Lines of Reality

However, the very features that make Lumiere so appealing also raise serious concerns about misuse. The ease of generating realistic videos, coupled with the anonymity common in online spaces, creates fertile ground for producing and spreading deepfakes. Such fabricated videos can be used to spread misinformation, damage reputations, or even manipulate elections, posing a significant threat to trust and social stability.

Experts warn that Lumiere could lower the barrier to creating convincing deepfakes. As the line between genuine and fabricated content blurs, public trust in online information could erode, fueling social discord.

Striking a Balance: Safeguarding Innovation

Acknowledging these concerns, Google has stated its commitment to the responsible development and ethical use of Lumiere. The research paper accompanying the technology calls for safeguards, including tools for detecting bias and identifying malicious use cases. Critics argue, however, that these measures may not be enough, and urge Google to implement stricter controls and consider limiting access.

Balancing innovation with ethical considerations is a complex challenge. While Lumiere holds immense creative potential, its misuse could have far-reaching negative consequences. The onus is on Google and other AI developers to prioritize responsible development, collaborate with experts and policymakers, and implement robust safeguards so that this powerful technology is used for good.

The Road Ahead: A Collaborative Effort

The debate surrounding Lumiere and its potential impact on deepfakes is far from over. It is crucial for all stakeholders, including tech companies, academia, policymakers, and the general public, to engage in open and constructive dialogue. By fostering collaboration, developing effective detection and mitigation strategies, and promoting media literacy, we can harness AI video generation for positive change while minimizing the risks posed by deepfakes.